- How the alleged leaked OpenAI Super Bowl ad exploded

- The multipronged hoax campaign behind the viral “reveal”

- OpenAI’s response and the quick dismantling of the hoax

- What this hoax reveals about AI hype and user psychology

- Practical checklist: How you can verify the next viral tech “reveal”

- Was the alleged leaked OpenAI Super Bowl ad real?

- Did OpenAI plan to launch hardware like the earbuds and shiny orb shown?

- How did investigators discover that the Reddit user was not an OpenAI employee?

- Why did the hoax include fake headlines and a cloned news site?

- How can I avoid spreading similar advertising hoaxes in the future?

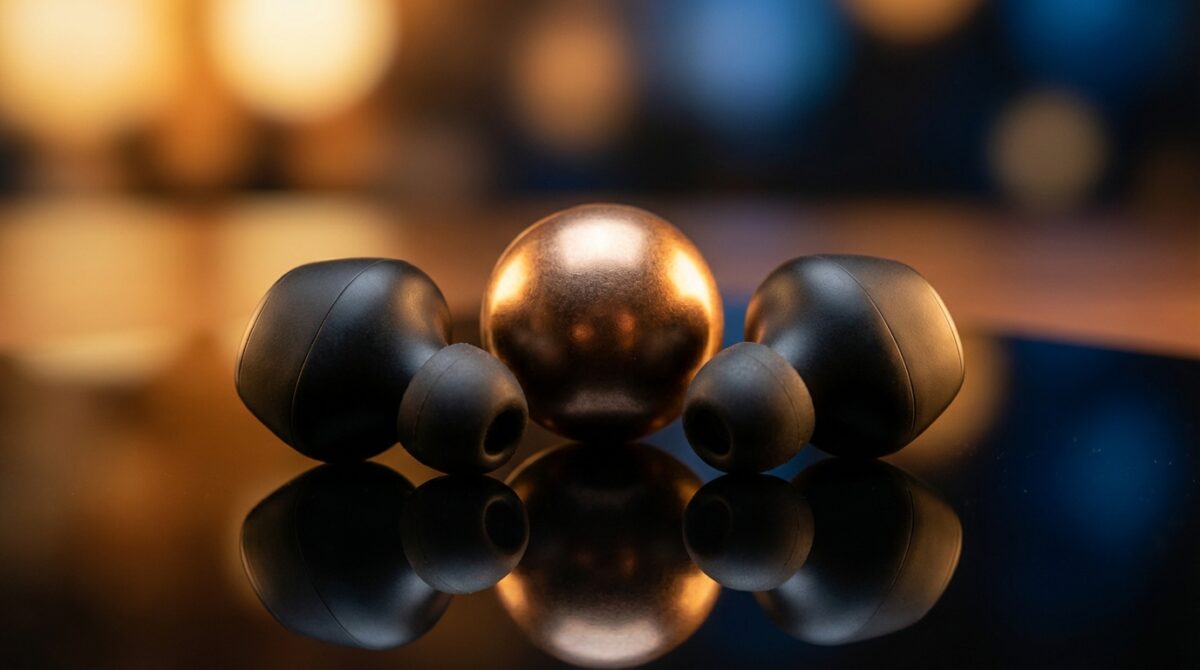

The night of the Super Bowl was already noisy when another storyline cut through the chatter: an allegedly leaked OpenAI ad, complete with futuristic earbuds and a mysterious shiny orb, spreading across feeds before the final whistle. Within hours, the clip went viral, only to be exposed as a carefully staged hoax that weaponized hype, brand trust, and the mechanics of online attention.

How the alleged leaked OpenAI Super Bowl ad exploded

The story did not start with a glossy broadcast spot during the Super Bowl, but with a supposed mistake on Reddit. A user claimed to be a frustrated OpenAI employee whose ad had been pulled at the last minute and who, in a moment of anger, had uploaded the full video. The post included a slick clip featuring Alexander Skarsgård, wraparound earbuds, and a gleaming shiny orb that looked like OpenAI’s first hardware experiment.

That narrative was irresistible: an insider meltdown, a lost multimillion-dollar campaign, and an unreleased product revealed in the most dramatic way possible. Screenshots of the thread started to travel on X, Discord channels, and private group chats. Many viewers accepted the “leaked” label instantly because it aligned with what they expected from a tech giant: high-end visuals, celebrity talent, and an enigmatic device that promised more than it explained.

The fake employee, the shiny orb and the illusion of authenticity

The Reddit account behind the claim, “wineheda,” appeared at first glance to be a plausible disgruntled staffer. On social platforms, a believable backstory often matters more than verifiable proof. However, archived traces showed that the same person had previously described themselves as a bookkeeper in Santa Monica trying to grow a small business, not a creative working on advanced OpenAI advertising with Jony Ive in the run-up to Super Bowl LX. That mismatch was a first sign that the alleged leak did not line up with reality.

The video itself leaned into familiar sci‑fi imagery. The earbuds wrapped elegantly around the ear, while the shiny orb sat in the frame like a desktop companion, suggesting a physical gateway to OpenAI’s software. No interface was shown in detail, yet the object invited speculation about ambient computing, private voice assistants, and seamless AI access. Hoaxers understood that hinting at these ideas would make the clip feel consistent with expectations about the next wave of AI hardware.

The multipronged hoax campaign behind the viral “reveal”

The Reddit post was only one piece of a broader operation. Whoever engineered the hoax treated it like a real product launch, planning touchpoints across platforms and targeting people who could amplify the story. The aim was not only to trick casual viewers but also to convince journalists, influencers, and advertising insiders that this was a genuine, pulled OpenAI Super Bowl ad whose existence had slipped through corporate controls.

Tech commentator Max Weinbach shared screenshots of an email he received a week earlier, offering a payment of $1,146.12 to promote a tweet about an OpenAI hardware teaser featuring Alexander Skarsgård. That oddly precise amount hinted at a real media budget, framed to look like the type of paid amplification used by agencies during major events. The hoaxer did not rely on organic spread alone but tried to purchase initial visibility, giving the story an extra layer of perceived legitimacy.

Fake headlines, cloned sites, and the appearance of credibility

The campaign also tried to co‑opt established media brands. AdAge reporter Gillian Follett mentioned that a fabricated headline attributed to her began circulating, suggesting she had reported on OpenAI changing its Super Bowl strategy. At the same time, OpenAI’s chief marketing officer Kate Rouch flagged an entire fake website designed to mimic authoritative coverage of the allegedly leaked ad. For many readers, seeing a familiar layout and byline is enough to suspend doubt, even when the content was never actually published.

This layering of signals – the Reddit confession, the polished video, the outreach to tech figures, and the counterfeit trade‑press coverage – echoed real campaign rollouts. Modern hoaxes often borrow the rhythms of legitimate marketing. They use press-style language, plausible budgets, and even invented internal drama to convince you that you are glimpsing the hidden side of corporate decision-making, rather than a staged narrative written by outsiders.

OpenAI’s response and the quick dismantling of the hoax

Once the clip reached a certain level of visibility, OpenAI leaders stepped in publicly. President Greg Brockman responded on X, calling the story fake news, which immediately reframed the conversation. His intervention signaled that the company did not consider this to be a subtle teaser or playful misdirection. Soon after, spokesperson Lindsay McCallum Rémy stated clearly that the alleged leaked ad was “totally fabricated,” leaving little room for ambiguity or interpretation.

The speed and clarity of these responses mattered. When a false narrative involves potential hardware products, investors, developers, and partners watch closely. A rumored shiny orb device could influence expectations around OpenAI’s roadmap or affect perceptions of existing collaborations. By directly labeling the piece a hoax, the company reduced the risk of prolonged confusion and helped journalists distinguish between verified campaigns and opportunistic fabrications.

Why fast debunking has become a core brand skill

For a company like OpenAI, which sits at the center of AI policy debates, any ad associated with its name is more than just marketing. A Super Bowl presence would carry signals about public positioning, safety messaging, and the role of AI in everyday life. A fake spot that shows unannounced earbuds and a shiny orb device suggests a hardware strategy that might not exist, potentially distracting from actual announcements or research updates.

Rapid debunking now forms part of brand governance. Public denial from senior leadership, confirmation from communications teams, and behind-the-scenes outreach to reporters create a consistent record. This incident illustrates how tech organizations must treat viral hoaxes almost like security incidents, with defined escalation paths. The lesson for your own company is clear: if your brand operates in a space prone to speculation, your crisis playbook should treat misinformation as predictable, not exceptional.

What this hoax reveals about AI hype and user psychology

The enthusiasm around the allegedly leaked OpenAI ad was not only about the Super Bowl, or even about Alexander Skarsgård’s involvement. It tapped into a deeper expectation that the next phase of AI will arrive as physical companions: earbuds always in your ears, and orbs glowing quietly on your desk. When viewers saw that combination, many felt the story was plausible without demanding technical details or verification.

Hype thrives on partial information. The clip implied that the earbuds and orb would integrate seamlessly with OpenAI systems, perhaps offering private AI conversations, context-aware assistance, or cross-device continuity. Those features were never described, yet the visuals encouraged viewers to fill the gaps. This pattern mirrors how concept cars or prototype phones create excitement years before shipping, except that in this case, the object did not exist at all.

Recognizing the triggers that make hoaxes believable

Several psychological levers appeared in this case. The “frustrated insider” felt authentic because people have seen real employees vent online after product or ad cancellations. The presence of a well-known actor gave the clip cinematic weight. The timing – during the Super Bowl window, when advertising budgets spike – made a secret, pulled spot highly believable. Combined, these elements reduced skepticism.

For anyone working in communication or product strategy, this incident is a reminder that visual sophistication alone no longer distinguishes real content from fabricated narratives. You are better served by training your teams to recognize patterns such as anonymous insider claims, brand-new accounts, or unverifiable payment offers. Over time, those internal skills become as important as media buying when your brand operates under constant public scrutiny.

Practical checklist: How you can verify the next viral tech “reveal”

After this hoax, many observers blamed themselves for sharing the clip too quickly. Instead of treating it as a one‑off embarrassment, it is more useful to extract a repeatable process. Each time you encounter an allegedly leaked ad or hardware reveal, a short verification routine can dramatically reduce your risk of amplifying fiction that exploits your trust in familiar brands.

You can adapt the following list for your team, whether you work in marketing, journalism, or product research. None of these steps requires special tools; they rely on discipline and pattern recognition. Repeating them regularly trains your instinct for separating staged stories from legitimate information, especially during high‑noise moments like major sporting events or flagship conferences.

- Check the source account’s history and activity rather than focusing only on the viral post.

- Search for confirmation from official brand channels or named executives before accepting a “reveal.”

- Look for reputable reporters discussing the story; absence of coverage from specialized outlets is a warning sign.

- Be cautious when payment offers appear for posting about a leak or teaser without formal contracts.

- Use basic archive tools to see whether quoted headlines or articles ever existed on known media sites.
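
The archive check in the last item can be partly scripted. The sketch below is one possible approach, not a definitive tool: it assumes the Internet Archive's Wayback Machine availability endpoint as the "basic archive tool," and the function names (`build_query_url`, `closest_snapshot`, `lookup`) are hypothetical helpers introduced only for illustration. A missing snapshot does not prove an article never existed, since not every page gets archived, so treat the result as one signal among several.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

# Assumption: the Wayback Machine availability endpoint is the archive tool used here.
WAYBACK_API = "https://archive.org/wayback/available"


def build_query_url(page_url: str, timestamp: Optional[str] = None) -> str:
    """Build the availability query for a page, optionally near a YYYYMMDD date."""
    params = {"url": page_url}
    if timestamp:
        params["timestamp"] = timestamp
    return WAYBACK_API + "?" + urllib.parse.urlencode(params)


def closest_snapshot(payload: dict) -> Optional[str]:
    """Return the archived URL from a parsed response, or None if nothing is listed."""
    closest = payload.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest.get("url")
    return None


def lookup(page_url: str, timestamp: Optional[str] = None,
           timeout: float = 10.0) -> Optional[str]:
    """Query the endpoint over the network and return the closest snapshot URL, if any."""
    query = build_query_url(page_url, timestamp)
    with urllib.request.urlopen(query, timeout=timeout) as resp:
        return closest_snapshot(json.load(resp))
```

Calling `lookup()` with the address of a quoted article and a date near the claimed publication day returns either an archived copy to compare against the screenshot or `None`, in which case the headline deserves a closer look before sharing.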

When you apply habits like these, even a polished clip showing futuristic earbuds and a glowing shiny orb becomes only the beginning of your evaluation, not the end. The alleged OpenAI Super Bowl ad hoax underlines how convincing deception can look and how valuable a structured response is for anyone trying to preserve credibility in a crowded information landscape.

Was the alleged leaked OpenAI Super Bowl ad real?

No. OpenAI leadership and spokespeople publicly stated that the supposed Super Bowl ad featuring earbuds and a shiny orb was fabricated. The video, the Reddit backstory, and the supporting material formed a coordinated hoax rather than an actual pulled campaign.

Did OpenAI plan to launch hardware like the earbuds and shiny orb shown?

There is no verified evidence that OpenAI planned to launch the specific hardware depicted in the hoax video. The clip used plausible design cues but was not connected to an announced product strategy, according to public statements from the company.

How did investigators discover that the Reddit user was not an OpenAI employee?

Archived records linked the Reddit account to a different professional background, including previous posts about working as a bookkeeper in Santa Monica. That history conflicted with the claimed role on an OpenAI and Jony Ive Super Bowl ad, casting doubt on the insider story.

Why did the hoax include fake headlines and a cloned news site?

The hoaxers attempted to borrow credibility from established media. By forging headlines attributed to advertising reporters and recreating a trade publication’s visual style, they tried to convince readers that trusted outlets had confirmed the existence of the pulled ad.

How can I avoid spreading similar advertising hoaxes in the future?

Before sharing, examine the original source, search for confirmation from official channels, and look for coverage by recognized tech or advertising journalists. If payment is offered to promote unverified leaks, treat that as a strong warning sign and pause amplification until details are validated.